The Ferry to Hogwarts

Alas there seems to be no ferry service to anywhere from this pier, though a block away is the ferry to one of San Francisco's most famously dubious tourist attractions, Alcatraz. (Possibly relevant note: The gap in my posting here was due to a stubborn head cold, not incarceration.)

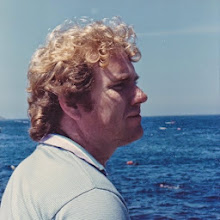

Nor is there any profound justification for posting this image, beyond the general Rule of Cool. But it provides a handy segue to an ongoing topic of this blog, the somewhat uneasy boundary line between Realism [TM] and Romance.

Such border disputes are by no means confined to outer space, but space is a particularly productive environment for them, because the whole idea of going into space for any reason is essentially and profoundly Romantic. Yes, comsats, weathersats, and various other things we have sent into space have their practical uses, but it seems awfully unlikely that strictly practical people would ever have come up with them, given how absurdly difficult and costly space travel is.

Yes, before space travel there was military rocketry. But - setting aside the question of in what sense our blowing each other up qualifies as practical - the established or foreseeable roles of military rocketry in the early 20th century did not point toward space boosters. Practical military rockets like the Katyusha were essentially self-propelled shells, more expensive and less accurate than standard shells, but able to be fired from cheap, lightweight launchers instead of heavy, expensive artillery rifles.

The V-2 was, in the pre-atomic age, a supremely impractical weapon: an expensive and inaccurate way to lob a shell not all that much farther than the longest-ranged guns of the time could achieve. No one would have come up with such an idea on purely military grounds. I'll guess that Versailles restrictions played a role in making the German army interested in alternatives to conventional artillery, but it was the first generation of space geeks, not military specialists, that put long-range rocketry into play.

Yes, nuclear warheads made ICBMs all too practical, but it is no accident that the first generation of ICBMs, both US and Soviet, turned out to be much more suitable as space boosters than as weapons.

Space travel is, like the image above, ultimately all about the Rule of Cool, AKA Romance. This has significant implications. As strong as are the practical reasons for not spending zillions on it, these reasons have not, so far, succeeded in making the whole silly thing go away. Unless post industrial civilization removes itself from the social selection options, it will probably not go away in the midfuture, either.

A comparison can be made here to other Zeerust-era future techs, such as the SST. Supersonic aircraft are also inherently cool, but not that cool. So, not only do SSTs fail to offer enough merely practical benefits to pay for their development cost, they also fail to offer enough coolness to overcome that limitation.

I suspect that people will walk on Mars before airline passengers (again) travel at supersonic speeds.

It may be somewhere between paradoxical and hypocritical for me to turn around and argue this point, considering how much time I spend here beating up on popular space tropes. But I beat up on the PSTs so you won't have to. Romance, in and of itself, need not apologize to realism for anything, but the minor sub-branch of Romance that decks itself out as hard SF has a certain obligation to fake it convincingly, including space futures that sustain at least the illusion that they were invented in this century.

The image was snagged from Google Maps. And here is a genuine example of mysterious British transportation signage. Can anyone here elucidate the meaning?

262 comments:

I'm still not sure I buy the idea that space travel is fundamentally a silly and impractical thing.

This planet at the end of the day is still a finite object. There is finite living space, finite outlets for social dissidents and most importantly a finite amount of time before one of any number of reoccurring cosmic or geologic (or human caused) calamities does its level best to scrape us off of this planet for good.

In the long run getting a self-sustaining industrial society in space up-and-going means freeing ourselves from the Earth's inherent limits and opening up an effectively infinite future.

That strikes me as an extremely practical thing to work towards.

Nick P.:

"This planet at the end of the day is still a finite object. There is finite living space,"

Yet enough that we already have more people than we know what to do with. And we aren't even making ideal use of the living space we have.

Meanwhile other places have very little living space until we terraform them.

"finite outlets for social dissidents"

There are already more wildly different cultures on Earth than there are viable targets for colonization in the solar system. Just how much will some extra living space really help us, especially when you probably need to be sponsored by a major government to get there?

"and most importantly a finite amount of time before one of any number of reoccurring cosmic or geologic (or human caused) calamities does its level best to scrape us off of this planet for good."

The only one I'm really seriously worried about is when the sun turns into a red giant some five billion years from now. That's over four orders of magnitude larger than the total history of Homo sapiens, and over seven orders of magnitude larger than the span of industrial civilization, so I think it's plenty of time for us to figure out FTL travel - or, if the laws of physics insist on being difficult, interstellar STL travel. (If you're worried about trip times, it's also plenty of time for us to figure out immortality.)
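As a quick sanity check on those orders of magnitude, here is a minimal sketch. The five-billion-year figure comes from the comment; the roughly 300,000 years for Homo sapiens and 250 years for industrial civilization are round-number assumptions of mine, not figures the comment states.

```python
import math

YEARS_UNTIL_RED_GIANT = 5e9   # figure quoted in the comment
HOMO_SAPIENS_YEARS = 3e5      # assumption: rough age of the species
INDUSTRIAL_YEARS = 250        # assumption: rough span of industrial civilization


def orders_of_magnitude(larger, smaller):
    """Return log10 of the ratio between two time spans."""
    return math.log10(larger / smaller)


species_gap = orders_of_magnitude(YEARS_UNTIL_RED_GIANT, HOMO_SAPIENS_YEARS)
industrial_gap = orders_of_magnitude(YEARS_UNTIL_RED_GIANT, INDUSTRIAL_YEARS)

print(f"vs. Homo sapiens: {species_gap:.1f} orders of magnitude")
print(f"vs. industrial civilization: {industrial_gap:.1f} orders of magnitude")
```

Under those assumptions the ratios come out a little above four and a little above seven orders of magnitude, which is consistent with the claim as worded.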

Recovering from a nuclear war or a dino-killer meteor smack is bound to be not much more difficult than terraforming an airless ball of rock. And it would require a very large disaster to ruin all higher civilization worldwide. If, say, all of North America were to suddenly sink under the sea, anywhere from Britain to China to Australia (not meant as an exhaustive list!) could end up taking over the reins. It'd certainly be an economic blow, and some valuable data would be lost, but we'd still have modern technology and plenty of first-world nations to produce it.

I disagree on the idea of SSTs coming back into existence after the colonization of Mars. How else to get humans to LEO than with a hypersonic transport with rocket boosters? Look at SpaceShipTwo. Multiply that by 100.

It's not totally out of the question. NASA has never had a need to develop low cost human to orbit systems because they have an almost unlimited budget. At, let's say, 80 miles altitude, not terribly far up, there is a transfer between the SST and a ferry craft to higher orbit, where a NERVA shuttle takes them to Mars. An obvious spinoff is the development of a downgraded version that can only go up 20 miles, but still can easily hit Mach 5.

A side note on these transports: Mach 5 is about a mile per second. That's 1.6 kps. That knocks an elliptical LEO down to only 4.9 kps more. For most chemical rockets, that's nothing.
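The arithmetic in that side note can be roughly checked with a short sketch. The sea-level speed of sound (about 340 m/s) and a typical circular low-orbit speed (about 7.8 km/s) are textbook approximations that I am assuming here; the comment does not say how its 4.9 kps figure was derived, and this sketch ignores gravity and drag losses as well as any benefit from Earth's rotation.

```python
SPEED_OF_SOUND_SEA_LEVEL_M_S = 340.0  # assumption: sea-level value, lower at altitude
MILE_M = 1609.344                     # metres in one statute mile
CIRCULAR_LEO_SPEED_KM_S = 7.8         # assumption: typical circular low-orbit speed


def mach_to_km_s(mach, speed_of_sound_m_s=SPEED_OF_SOUND_SEA_LEVEL_M_S):
    """Convert a Mach number to km/s for a given speed of sound."""
    return mach * speed_of_sound_m_s / 1000.0


def remaining_speed_km_s(carrier_km_s, orbital_km_s=CIRCULAR_LEO_SPEED_KM_S):
    """Speed still to be gained after release, by simple subtraction."""
    return orbital_km_s - carrier_km_s


mach5 = mach_to_km_s(5)
mile_per_second = MILE_M / 1000.0
shortfall = remaining_speed_km_s(mile_per_second)

print(f"Mach 5 at sea level: {mach5:.2f} km/s")
print(f"One mile per second: {mile_per_second:.2f} km/s")
print(f"Left to reach circular LEO: {shortfall:.2f} km/s")
```

With these assumed values, Mach 5 and a mile per second do agree closely, but plain subtraction leaves a bit over 6 km/s to make up rather than 4.9, so the commenter's figure presumably rests on assumptions not given in the comment.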

It is all about setting the bar at the right height to begin with. James Bond movies do this with a ridiculous action scene right at the beginning. The rest of the movie is more believable by comparison. If you can't take the ridiculous nature, you're out a few minutes and can walk out the door. The same applies to space travel and romance. If you stick to the default bar height, Reality (tm), then your believable romance amounts to project managers stabbing each other in the back for corporate funding. If instead you start the story with "popping out of FTL", you've set the bar MUCH higher.

I think space travel, to the degree we've actually done it -- with the exception of sending people to the Moon -- has been about as practical as it has any right to be. The manned Moon landings were a bit of potlatch, motivated by a strategic competition that, in the shadow of nuclear weapons, was struggling to find means of expression. Otherwise, our space endeavors do have reasonable economic motivations.

Military rocketry did contribute to the rapidity of space development, but not to the reasons behind it. And even if there hadn't been ICBMs, there's every reason to believe that military space lift would have been developed to the same degree for the purpose of emplacing spysats, weather satellites, comm satellites, and ultimately GPS. And after you have military space lift, you get commercial and scientific activity piggybacking on the technology.

Where Romance must meet reality is in the near future. Several centuries down the road, have any set of conditions you want, as long as it's internally consistent. Just don't forget that you're then dealing in science fantasy, not hard SF. Just like swords and sorcery, just because you can imagine it, that doesn't mean it's possible.

But in the relatively near future, as has been said, there has to be a plausible way from here to there. So you have to start with the realization that all of the classic rocketpunk motivations are out the door. You need to make up motivations and settings that sound plausible knowing what we know today.

WRT the airport sign, I'm thinking it means that the seats are for travellers and their friends/relatives only. There's no other interpretation of public -- even in British English -- that makes any sense.

Getting back to the ideas of SST's and other post war techs (all born about the same time as the Rocketpunk trope), I would suggest they are mostly victims of linear extrapolation, as discussed in an earlier post.

Prop planes made of canvas and wire become metal prop planes, become turbo compound "Super Constellations" then jet airliners. Supersonic jet airliners seemed to be the next step, but in reality, airline technology hit the top of the "S" curve with the Boeing 707.

The only way to break the speed barrier without insanely complex materials science, cooling systems or fuel consumption is to exit the atmosphere and friction, and do a suborbital boost in a SSTO or equivalent machine. Power on boost to the edge of the atmosphere, 30 minute coast and power on deceleration and landing. Overall, a much simpler flight regime, and a machine like the DC-X could be scaled up to perform if the market for hypersonic rides across the planet existed. If the need is to get places fast without reference to means, MAGLEV trains in evacuated tubes might also do the job for a far lower cost (amortized over a larger number of passengers). Notice we have actually bypassed the "airplane" trope.

Getting from the current situation to the Rocketpunk Universe will probably require one big leap away from rocket boost to orbit; the only problem is there are no obvious candidates with the combination of technical feasibility and cost that can do the job today or in the near future.

Just a quick comment: Humans have an instinctive need to explore, expand, and settle somewhere new...it may be left over from the need to migrate away from dangerous places, or simply spread out to ensure species survival, but whatever the reason, humans do have a need to 'go over the mountain'...that, in and of itself, is motive enough to colonize Mars or Titan, or wherever.

Ferrell

Ferrell:

"...that, in and of itself, is motive enough to colonize Mars or Titan, or wherever."

Not quite. It's motivation enough to want to go places in the solar system, and eventually to other stars. In human history, that motivation, combined with a few resources or a big enough threat, was enough. In the future case of leaving Earth, the vague idea that going places is a good thing is not matched by the availability of resources or threat. It's just too expensive to make the move for simple itchy feet to justify it.

Yes, but it is the 'itchy feet' that motivates people to find (or invent) a justification to go there. People have been doing that for millennia and I see no indication of that changing in the foreseeable future. While economic justifications are preferred by many, those aren't the only 'reasons' to move far away to start a new life; even going to other worlds, economics aren't going to be the only justification used for acting on that 'itchy feet feeling'...and it is that small group of people who act on that feeling that are going to come up with a justification (or set of justifications) unique to themselves...just like people have always done. And yes, colonizing another world will take an unprecedented amount of resources and effort, but there will be some who go beyond just dreaming about it and will have the wherewithal to actually do it; and after the first does it, others will follow.

Ferrell

Clarke: "The reason that the dinosaurs are extinct is that they didn't have a space programme."

Milo: Yup, nukes and dino-killers will have a job wiping out all humies, but a mutating bio-weapon might do the job.

Ferrell: Thanks for writing your words - actually put a lump in my throat... I get all sentimental about this kind of thing. :)

I'm sure that you all know my views on the matter [one of my art qualifications was based on the brief: 'Design and create a maquette for a statue in a museum of modern transport, in a Futurist style'... couldn't believe my luck, and the tutor laughed as she passed me the assignment: "Boy, there's a brief in there for *you*!"]

SST: I think that the main problem is that they can't be slamming their supersonic footprints into residential areas, so can only cruise at the speeds that they're optimised for over oceans.

They're very sub-optimal (ie inefficient) on take off/landing and transonic speeds, which might be a fair chunk of the flight time; routes that are mostly over ocean are pretty limiting in options/destinations; and it's best to not fly over the sea unless you otherwise have to, from a safety point of view.

It's the absence of tech that allows a supersonic plane to not generate a sonic boom that's the real killer... doubt that all of the accelerandos in the world will ever get round that one.

I guess that it will take a pretty huge resurgence in (national, most likely) pride; hypersonic capability; materials breakthroughs that allow them to not be such dogs in the subsonic regime; before we see them again.

tsz52:

"Yup, nukes and dino-killers will have a job wiping out all humies, but a mutating bio-weapon might do the job."

Left to their own devices with natural selection, they'd be more likely to mutate to be less lethal, rather than more. A disease doesn't prosper by killing off all its vectors. To really kill everyone, a bioweapon would most likely have to be designed to be 100% deadly from the start.

Interestingly, the Space part of space travel is getting easier all the time, a version of the M2P2 has been tested and discovered to provide protection against high energy solar radiation. http://nextbigfuture.com/2011/01/mini-magnetosphere-prototype-protects.html#more

This gives a high performance drive and reduces the mass of the spacecraft which needs to be devoted to shielding as well.

Sadly, we are still lacking an easy and inexpensive means of getting to orbit, and some rational reason for being there in the first place.

The difficulty in climbing out of Earth's gravity well can add to the story element of space colonies. Yes, there is a huge initial expenditure, but once that is done, fabrication can be done in space. Getting people into space would be very expensive, so they'd be expected to stay there for very long periods of time, perhaps even generations. It might be counter intuitive, but making cheap launch ability actually makes it less likely to have space colonies, since you could just commute to work easily.

I think you are very correct - this problem shows up a lot in SF - but I don't like your examples.

First, V-2. It *was* absurdly expensive, but it was also fairly accurate and had much longer range than any artillery. In addition, the largest guns of the time were confined to railways, while the V-2 could be transported on a trailer ("Meillerwagen").

As far as SSTs, (or spaceplanes for point-to-point suborbital hops) I think that there will be a resurgence in the relatively near future. There are practical benefits (for the very rich) to very fast travel, while manned exploration of Mars offers no immediate tangible benefits that I know of. (Asteroid mining may be another story, however.)

In addition, the technical problems with SSTs may soon be solved (see the Quiet Spike and shaped sonic boom demonstrator for examples of work on sonic boom reduction).

Anyway, great point, and darn those transistors for making manned space stations unnecessary!

Citizen Joe:

"It might be counter intuitive, but making cheap launch ability actually makes it less likely to have space colonies, since you could just commute to work easily."

Only if in addition to cheap, the trips are also very short.

Welcome to a couple of new commenters!

I encourage 'anonymous' commenters to sign a name at the bottom of your posts (whether it is your real name makes no matter).

I don't actually think space travel is silly; if I did it would be even sillier for me to blog about it!

But as for 'practical,' we have found such reasons only after the fact - the basic technical requirements were already worked out, by space geeks, before anyone came up with communications relays, etc.

Absent space geekitude, would rocketry have ever been developed to the degree, and in the direction, needed for space boost? The focus of traditional military rocketry was cheap & rugged, a la the Katyusha, a very different line of development.

On SSTs, it is all a matter of economics. There's a reason why the Virgin guy is putting money into suborbital tourism rather than an SST. People may pay $200,000 to pop into space. It is more doubtful that they would pay $100,000 just to get to Hong Kong faster. Air travel has become a utilitarian thing, even at the high end.

Eventually, given colonies and all that, space travel might become merely utilitarian as well, but a LOT of Romantic stuff has to happen to reach that point.

Right now, reaching space is something like trading with China by taking a caravan along the Silk Road. There was a possibility of vast profits to be made (enough so the Serenìsima Repùblica Vèneta became a major power acting as the European conduit to the road).

It can provide riches for people willing to invest the time and resources, and manage the risk, but for the rest of us, the amount of risk involved and the scale of resources needed is very daunting. The Silk Road was eventually outflanked by developments in shipbuilding (oceangoing caravels and accurate navigational maps and tools). We will need to develop some analogous means to bypass rocket technology in order to achieve economies of cost, speed or scale.

Using space to reach for riches is similar at the current time to traveling to China via the Silk Road. If you had the resources, time and ability to manage risk, you could reap vast rewards (so much so the Serenìsima Repùblica Vèneta became a major power acting as the European conduit to the Silk Road).

The Silk Road was outflanked by the development of reliable oceangoing ships and accurate navigation tools, so by analogy we need to develop the equivalent of Caravels to bypass the silk road of rocket technology.

Rick:

"I don't actually think space travel is silly; if I did it would be even sillier for me to blog about it!"

It should be noted that for the sake of fiction, even things which are blatantly and unabashedly impossible in real life can still not be "silly", as long as you can write a good story about them. (Hence the fantasy genre.)

Thucydides:

Although I get the analogy you're making, in another respect I think the Silk Road is a poor analogy for in-system space travel. The defining characteristic of the Silk Road is that there were urban settlements along its entire length, and that no-one tried to travel its full length without pausing anywhere along the way. This is quite unlike rocket travel, where the only reasonable travel plan is to just go from point A to point B, without any intermediate stops.

An FTL civilization might develop a better Silk Road analogy, hopping from star system to star system while still taking quite some time to cross the entire galaxy.

Hi all, first reply here. But I do read every once in a while, and I love the discussions going on here. Since my usual venting place, the newmars forums, seems to be out of commission, I need to rant somewhere. Hope I don't intrude! ;)

First of all, getting off-planet may not be practical now, but that won't always be so. If only because earth's crust has a finite amount of reachable metals, we will get about going out there eventually. It may be with mass-produced cheap chemical expendable boosters, with cool fusion engines, or with an even cooler space elevator, but we'll get there, it can be done, and there IS an ultimate reason.

Second of all, we haven't gone there yet because we don't want to. Plain and simply put, all of Earth's nations' space programs are a pitiful percentage of their gross national products. Where the money is usually shows where the real interest is. For example, I believe in Spain we would be exploring the asteroid belt by now if we spent on space the attention, money and effort we spend on soccer.

A space colony could start construction tomorrow, if it only depended on tech. We can't build a space elevator or fusion engines, but we could build launch loops if we really wanted to go to space now. Chemical engines could be made cheap enough. But even the mighty NASA is something like 0.5% of the federal budget, right?

Personally, I believe that someone will do it (establish themselves off-world, that is) before it is really necessary or desirable (by that meaning, probably, Earth is reaching a point where geometric economic growth is no longer possible), but not that much sooner. And it will be for some foolish reason like national pride or the fabled "spirit of frontier". Humans are like that. But what amazes me more is that at the very moment we do that (the sooner the better!), we are seeding a second nucleus of growing human civilization that isn't confined to the resources of a planet, but those of an entire solar system. That raises a compound interest problem whose implications stagger the mind. (Imagine what several trillion humans would do? I know I can't, and I know it isn't that far away)

On a different note, and actually straight in the middle of my specialty, SSTs have "a couple" more problems other than sonic booms. Like an insane fuel expenditure that they can't really charge to an average person and that kills their supposed market. Remember, we are still using chemical fire here, and that has a finite efficiency. Aerodynamic heating also plays a role, as has been mentioned. Bottom line, they are too expensive for civilians to afford, and nowadays in the commercial aerodynamic world, fuel efficiency is the new rule of coolness anyway.

And sorry about the length, it came out longer than intended... must be the forum withdrawal syndrome.

Yes, the problem with analogies, metaphors and other clever figures of speech is they often can be taken several ways. In this case, I was trying to suggest that using rocket technology to reach orbit has about the same level of difficulty and economic efficiency as trading along the Silk Road in the Middle Ages.

An interplanetary "Silk Road" of sorts does exist in the form of the "Interplanetary highway"; the ever shifting nodes of gravitational attraction between planets and the Sun, but is only good for unmanned probes with lifespans and missions measured in years or decades.

More like centuries or millennia, for interplanetary travel. It's only at all useful for travel inside a single planetary system (like Earth-Moon and Lagrange points), where it does take "only" years (as opposed to the Apollo missions, which pulled this off in DAYS).

Following this too

This ties into the whole Accelerando talk. Linear projection of growth trends can be very foolish. There's been a lot of hate and backlash against singularity fiction. My personal terms for it are weak singularity and strong singularity. Weak singularity means the tech can go all wonky but we're not talking about a techno-rapture. Strong singularity implies just that.

The opponents of the super AI = singularity concept say that there's no reason for a super-brain to automagically start advancing more quickly than us. It's not going to devise new tech by super-thinking, it has to build particle accelerators and experimental equipment, extract resources and generate energy, etc. And what I say to that is that a super AI has the potential to run at maximum theoretical efficiency. So there's no politics, no wasting resources, no graft, no sitting around. It's bang bang bang cranking out the work.

There was a pretty good Terminator writeup by an obsessive fan on the goingfaster site. He documented what the 2029 world would be like, how Skynet could operate. And what was really interesting is how he showed Skynet was about to pull radically ahead of the humans with the new tech being developed. The old factories were pretty much familiar to us. They were adapted from human facilities, using our production equipment with new robots added to replace the human operators. The next level was purpose-built factories that were impenetrable to us. No human-scale catwalks, no OSHA standards, not even breathable air in many places. Yes, there were maintenance spaces for robots but those shafts could run any which way. Hostile, industrial, unfriendly. And then the new stuff with nanotech was set to blow our minds. Factories not constructed but bursting from the ground, self-assembling from feedstock piped into them.

Just as self-replicating biological life covered vast tracts of the planet surface and was the most important thing going on there, Skynet was ready to replace biomass with nanomass. The only limit to Skynet's growth at this point was the resistance, which caused Skynet to keep squandering resources building war machines, losing factories to attack, etc. Skynet wasn't able to outproduce the humans, who were getting supplies fed to them from bases overseas. The people scurrying around and living in sewers was just how everyone lived on the American front, which was the last front of the war.

Aaaaaaanyway, the question here is if people can imagine a future where tech singularity and AI gods aren't inevitable and the reason can't be "because I don't want them to be," it has to be logical. :)

Strong AI is magitech right now since we simply have little idea of how it could be made to work. There is very little agreement on how we think, so I think this question will be open for a long time to come. Since the human brain has more connections than there are stars in the Milky Way galaxy, even brute force tech AI is still a long way off, much less algorithms to run on this hardware.

Assuming this is possible, I have contended in the past that AI's would be thinking and communicating at speeds orders of magnitude faster than biological brains, so they will pay about as much attention to us as we do to ants. Most of the time, we only pay attention when they are crawling around the kitchen or eating the foundations of the house...

The goals of the AI's will be rather opaque to us as well; while we spend five minutes formulating the question of what is going on, they will have subjectively spent several thousand years thinking and planning. Their ability to manipulate the environment will be much slower (although they might be working at micro or nano scale, so still thousands of times faster than we can work), so many AI generations will pass while "something" happens, somewhat like generations of artisans worked to build a cathedral in the Middle Ages.

About the only underlying motivation that we will understand is they will want access to the 120,000 TW of energy the Earth receives from the Sun.

Yeah, that's kind of where I'm at, foggy understanding of AI motivations by us mere mortals.

Stories are about conflict of one sort or another, either man against nature, man against man, or man against himself. We want to read about people overcoming problems or maybe losing after a tremendous fight. We like upper stories but can also appreciate a really good downer.

The few things we can extrapolate are that life would need some kind of food, potentially shelter, and the means to reproduce. They would need that motivation to compete in any kind of Darwinistic system. Higher level motivations seem to be a byproduct of intelligence. A clam or a dog would have a hard time understanding ennui and why a well-fed and safe person wouldn't be extremely happy. I wonder what psych problems an AI could develop?

Thucydides:

"Assuming this is possible, I have contended in the past that AI's would be thinking and communicating at speeds orders of magnitude faster than biological brains, so they will pay about as much attention to us as we do to ants. Most of the time, we only pay attention when they are crawling around the kitchen or eating the foundations of the house..."

Thinking faster than us does not mean they are smarter than us. We pay little attention to ants not because they're slow (ants can work just as quickly as us, on their own scale), but because they're stupid and unlikely to accomplish anything of note even if we give them time.

Anyway, thinking quickly is of limited use given that the physical processes with which you implement what you've thought of still have to proceed at a limited pace. If it takes a year to build something, is it such a big deal whether you can draw up the blueprints in a day or a month?

"many AI generations will pass while "something" happens,"

Why? Why program your AIs to die in three seconds, no matter how many brilliant ideas they can come up with in those three seconds? More likely they'd simply be "immortal" and have really long subjective lifespans.

jollyreaper:

"A clam or a dog would have a hard time understanding ennui and why a well-fed and safe person wouldn't be extremely happy."

I wouldn't be so sure about that. Even animals like to play with toys when they're not doing something more important. So they have some conception that there is more to life than pure survival.

Animals can't think ahead as well as us, though, so an animal that's well-fed and safe now isn't going to worry that the conditions keeping it well-fed and safe are going to collapse eventually if it doesn't prop them up.

Let's see...

1. Itchy feet are not enough of a motivation to colonize the solar system. And that's not because I'm -- or anybody else is -- a dismal economic-rationales-only stick in the mud. It's because individual itchy feet and the resources to move individuals around the surface of the Earth are easily within reach of each other. Individual -- or even societal -- itchy feet are not within easy reach of space colonization level resources. Only the invocation of magitech will make it so. That's fine for Romance, but for Reality? Not so much.

2. YMMV, but I think we're still experiencing altogether too many matter-of-fact invocations of the McGuffinite Future.

3. Filthy rich people won't bring back SSTs. They spend their private jet money on converted 737s with sleeper cabins, accepting as a cost of doing business taking up to 24 hours to get where they're going.

Sorry about the multiple posting. I got a couple of "too long" errors, but they were posted anyway. Must be on account of me being a newbie. ;)

Anyhow, jollyreaper:

The whole "accelerando thing", in regards to computers and AI, has run up against the hard physical truth of definite limits on how small you can make a transistor. Eventually I expect we will find out there is also a limit on how many transistors, however small, you can make work together before the distance between them becomes a factor. And then we'll have our ultimate computer (based on semiconductor chips, of course), and it may turn out better than a human brain at computing, and therefore smarter if programmed properly, or it may not.

Anyway, for every technology, there is some form of physical limit. And mastering that technology doesn't mean you can discover the next one the same day. Those things are more like temporary periods of extreme growth as a technology is first discovered and then perfected, and periods in between when not much technological growth can happen.

Tony said: "Itchy feet are not enough of a motivation to colonize the solar system. And that's not because I'm -- or anybody else is -- a dismal economic-rationales-only stick in the mud. It's because individual itchy feet and the resources to move individuals around the surface of the Earth are easily within reach of each other. Individual -- or even societal -- itchy feet are not within easy reach of space colonization level resources. Only the invocation of magitech will make it so. That's fine for Romance, but for Reality? Not so much."

I never said it would be easy; on the contrary, I did say that it would be difficult...and it is that difficulty, that challenge, that will motivate people to develop the resources to overcome those challenges. And despite what you say, you do sound like a pessimistic stick-in-the-mud; we don't need 'magitech' to colonize the Solar system, we can do it now with the tech we already have. It would take massive amounts of money and intense effort by many people, along with a huge amount of resources, but it can be done. The chances of it being done in the next ten years are slim to none, but it isn't impossible.

Anyway, on another, slightly different track: what would the rest of you take with you to a new colony site? Would you do the whole 'one-way-trip' thing and not have return trips until the colony can support its own launch facilities? Or would you have the build-with-rotating-crews model like with the ISS? Build the colony out of mostly native materials? Or would you ship most stuff from Earth?

Me, I'd bring the machines to build the large structures, but until the colony develops sufficiently, I'd ship the more complex equipment from Earth; I'd also have a mix of permanent and rotating personnel. I'd also build small space stations, simply for someplace close by for the colonists to have an Earth gravity environment so they don't have to go back to Earth so often...but, that's just me, and I might tweak the details later. So, what are your thoughts?

Ferrell

Tony:

I don't think I was mentioning McGuffinite when I said Earth's mineral resources are limited. And the kind of economic growth the Earth is experiencing guarantees that they will eventually run out. I'm not even mentioning oil, of course (there are alternatives, notably nuclear), but the end of the era of cheap plastic will shorten the time before the day we just can't mine enough. Some stuff like gold is already running thin. Or we run out of space to grow food in; though I doubt that will happen soon, it will eventually happen. In the end, if we don't expand, we wither and die; that's the way with humans (we are like locusts that way). And after we fill the Earth to the uncomfortable limit, the only way to go is up.

Not that I'm saying that going to space will alleviate Earth's population pressure (100 space elevators won't do that), but it will provide new ground for the colonies to grow in, and incidentally secure the future of the human race in the solar system.

And about your 3rd point, I do agree that filthy rich people won't bring back SSTs. They didn't get to be filthy rich by throwing their money away for little gain. That is, unless some new and different class of engine (hint: it's not powered by chemical reactions) makes supersonic, or even better, hypersonic ballistic travel profitable enough.

Ferrell:

"I never said it would be easy; on the contrary, I did say that it would be difficult...and it is that difficulty, that challenge, that will motivate people to develop the resources to overcome those challenges."

1. Please stop insisting on taking things so personally. I'm taking great pains to address your ideas, and not you.

2. Sorry, but I find the idea that wanting to do something leads to the tools to do it to be a rather conclusively disproven concept. Even the Apollo program was predicated on throwing money at technology for political reasons. Kennedy was told by people who were in a place to know that we could in fact land a man on the Moon. He didn't make some great idealistic challenge to the nation, as is portrayed in historical fiction and docudrama.

Likewise, the "Age of Exploration" is a fantasy of lazy primary and secondary level textbook writers. It was an age of figuring out how to use existing technology to make money off the East while at the same time cutting the Ottoman Empire out of the loop. When the Americas were discovered, it became an age of finding out what was there to exploit and how to exploit it.

If there's no resource or strategic position to exploit, space won't mean crap to the people who would have to pay for it, most of whom, even at the policy level, have only a vague notion (and will always have a vague notion) of what it's about.

"And despite what you say, you do sound like a pessimistic stick-in-the-mud; we don't need 'magitech' to colonize the Solar system..."

The reference to "magitech" was made WRT the statement: "Individual -- or even societal -- itchy feet are not within easy reach of space colonization level resources." That's a verifiable fact -- no amount of restlessness or idealism will make spaceflight any less expensive or risky. Yes, we could potentially expand a robust human presence out into the solar system with near-term technology. But only magitech (from our current perspective) will bring capabilities within sight -- much less shouting distance -- of dreams.

Re: Rune

At present costs -- and maybe even at an order of magnitude less cost -- there's just no imaginable way that resources would be cheaper in space than on the Earth. Even precious metals aren't that valuable. And non-mineral resources can't be found in space anyway.

You're right that that's not a McGuffinite issue per se, but it comes from the same logic...the logic that says if Earth resources get expensive enough, we'll go off Earth for them. But that logic is predicated on going off Earth becoming magitechnically cheaper than it is now.

Milo

Even if AI's are no smarter than the average human, the ability to think faster will give them the ability to accumulate knowledge and experience far faster than we will be able to.

Consider that there are drug regimens now that can abolish the need for sleep in humans. Barring negative side effects, any person using these drugs will have an extra 8 hours a day to devote to productive activities, effectively giving them 2 1/2 extra days work every week. The compound interest effects will rapidly allow people on these regimens to pull ahead of their counterparts economically, socially and even physically (more time to exercise and fine tune the diet at a minimum), so being able to think orders of magnitude faster will have astounding compound interest effects.

The AI would be able to plan a project in milliseconds, and while you are printing out the plan, will be doing modeling so the successor plan will not be based on limited experience, but the equivalent of thousands of years of research and modeling. If the AI has access to tools to conduct experiments in the physical world, there will probably be "parallel" labs testing every conceivable combination of factors that the AI has resources to access (and given the potential payoff, the humans will be happy to cooperate to the fullest extent).

WRT getting into space, I agree with Tony that we do need to discover some means of reducing the cost of access to space by orders of magnitude, but am more optimistic about what will happen once we do have cheap and easy access. After all, most settlers in the Americas did not find gold or El Dorado, but soon figured out how to exploit the local resources for their own survival and then for profit (either through internal trade or external markets). Much of the space economy might not be concerned with the markets of Earth at all, but with supplying local wants and needs (Jane Jacobs's "import substitution" meme).

There's a couple of critical problems that haven't been discussed yet. The first is that if we're going to go into space for an extended period of time, we're going to need to bring along an entire ecosystem. We don't really know yet how to build one of those, so I think that's a bigger technical problem right now than any particular drive system.

The second is that for a variety of reasons, I can't see a big push for space development in the next few decades. We're going to be busy with the transition through peak oil as well as climate change. I think we'll get through it, but it will be a while before we cultivate enough energy sources to take care of our needs and to make a big push into space.

On top of that, we're looking at long term population decline. Among industrialized nations, the US, Britain and France are near replacement level fertility. Germany, Italy and Spain are well below replacement level; Japan, China and Singapore are even lower. The rest of the world is not immune - fertility rates are dropping everywhere. Mexico's is pretty close to replacement level. This applies even to Muslims - Iran now has a lower fertility rate than France. The basic problem is that it takes far more labor to raise a child to be a functioning citizen of an industrialized society than it does to raise a child to become a peasant. So a few decades from now you're looking at a world of aging and declining populations. You're not likely to find anyone pushing too hard for a new frontier.

Now for a more optimistic take. Suppose you want to go to Mars or Saturn, for reasons of science or because someone's discovered MacGuffinite. OK, we've already had the oil rig analogy but where that breaks down is that oil rigs are never more than a day or so away from civilization. Oil rigs also don't need their own ecosystem.

So we've got our scientists or miners. We'll need a habitat with its own ecosystem that can keep them alive for a couple of years, preferably with some comfort. We'll need people to run the ecosystem. We'll need medical crew capable of tending to all likely medical needs. A psychologist sounds like a good idea as well, and probably at least one cop to run things, and people to manufacture things. You're already well on your way toward a city in space. On top of that, figuring out how to use local materials will be a lot cheaper than shipping them from Earth. If you want to send out miners or scientists you're likely to want to learn to do all these things. Once you've done that, colonization for other people becomes a lot easier. So it might develop like this:

Step 1: There is a push (science? MacGuffinite? Keynesian stimulus?) to move out to Mars or other parts. So we learn to build habitats big enough to make this practical.

Step 2: Over time, these explorers learn to use more and more local materials to build their habitats, reducing costs.

Step 3: This is where the s curve goes steep - now that the engineering is worked out, you can build cities in space (or on moons, etc.) You might get more classic sf colonies. They're likely going to need a pretty strong collective mentality - think Lake Wobegon, not Deadwood.

Step 4: We now have our rocketpunk solar society. Depending on how drive technology develops, we may have a long period of development separate from Earth and then suddenly drive improvements allow much more contact. You could also get wannabe libertarian space cowboys showing up and causing trouble in space Lake Wobegon.

Thucydides:

"The AI would be able to plan a project in milliseconds, and while you are printing out the plan, will be doing modeling so the successor plan will not be based on limited experience, but the equivalent of thousands of years of research and modeling."

Yes, but this covers only completely theoretical work that the AI can do in its head. If the development process involves building and testing prototypes, or a large amount of dumb number-crunching (something we already do with top-of-the-line non-sentient computers today, and can still take significant time for serious problems), then your fast brain won't help you any. You can only accomplish so much just by staring at a piece of paper, no matter how long you stare.

"Yes, but this covers only completely theoretical work that the AI can do in its head. If the development process involves building and testing prototypes, or a large amount of dumb number-crunching (something we already do with top-of-the-line non-sentient computers today, and can still take significant time for serious problems), then your fast brain won't help you any. You can only accomplish so much just by staring at a piece of paper, no matter how long you stare."

Here's the critical thing. An AI may be working within the physical constraints of the real and physical world but it will work at peak theoretical efficiency. There's no need for rest, no need for sleep. The only downtime for the machines would be maintenance. There will be no slack time, no water cooler gossip, no dicking around on scifi blogs (who, me?), just relentless effort.

The only real point of question is whether the curve of progress will remain exponential or flatten out.

As for the practical limits of an AI civilization, the question is "what are the basic needs?" Power, obviously, for running the machinery, followed by the raw materials for making spare parts. In real world ecosystems, each species strives to reproduce as much as possible and remains in balance with the environment only because of predation or natural impediments to growth. Remove a species from its native environment and place it somewhere unprepared for it and we see rabbits in Australia, kudzu vines in the American south, or the fire ant problem in the same region.

Now, would an AI have a goal of ceaseless expansion? Would it reach a point of being "good enough" and content with that size and no more? If not, then the AI would seek to maximize energy inputs and access to raw materials. The AI might decide that power generation requires too much effort and it would rather just capture solar energy. The equatorial belt would be seen as prime real estate. There would also be areas ripe for exploitation in the windy areas, places with profound tidal flows, and so forth. If there is not one AI but competing AI's, then naturally the competition will be between them, assuming they aren't amenable to negotiation.

The idea I'm trying to work around for AI's or advanced biologicals is the temptation for what I guess could be called holodecadence. Trek fans joked that the holodeck was the most interesting part of the Enterprise and that if they had one of those they'd never bother mucking about with stupid aliens because it's so much more interesting inside.

Really, I think that's a more profound problem than we even begin to realize. Several scifi shows have played with the concept of addictive VR realities. We see online roleplaying game addiction today. Red Dwarf's version was called Better Than Life. If you really could escape into a dream world like that where everything is perfect, why muck about in the real world? We've seen this sort of decadence take down empires in the past but that's in part because it takes a whole lot of peasants to keep the nobles comfy in their palaces and that breeds resentment. But if we're talking about a post-scarcity culture with all the scut work done by machines, who's left to rebel?

That would make for some terrible storytelling, obviously, but how to avoid it? The best I can figure, you'd have people (biologicals or AI's) rebelling against simulation like temperance movement crusaders railing against alcohol. They would make an absolute virtue out of the real and see simulated reality as leading to a slow death of the mind.

"Step 3: This is where the s curve goes steep - now that the engineering is worked out, you can build cities in space (or on moons, etc.) You might get more classic sf colonies. They're likely going to need a pretty strong collective mentality - think Lake Wobegon, not Deadwood."

I'm thinking one potential might be the law of unintended consequences. As was mentioned upthread, we came to the Americas for god, gold, and glory, not just for new settlements, but the reason for the investment isn't so important as what happened once we got here.

So building upon your scenario, let's say China paid for some space habs. Maybe there's macguffinite, maybe for science, maybe for national prestige. Well, what happens if the astronauts can't go home again? Say there's a big power shift and they'd be purged as politically unreliable intellectuals of the old regime. The expensive part is getting up into space. And if they ever have kids, then the kids would not have the nostalgia for and allegiance to home.

AI or advanced biologicals will have "friction" effects of the physical world, but these are not insurmountable. The simple analogy is human artisans working on cathedrals in the Middle Ages; their effort was rewarded in the next life since cathedrals took a century or two to complete. Even with long subjective lifespans, it seems a bit of a stretch for an AI to be "alive" or even have the same personality after thousands of subjective years while waiting for the event to happen in the physical world.

Probably the first order of business for the AI civilization is to find ways to work as quickly as possible in the physical world, so their interests and abilities will be aligned. No matter how much you want "Rocketpunk", in the millions of years of subjective time while you wait, they might be working on micro and then nano systems that can manipulate things at a very rapid rate.

After a while, labs will beget robot factories which start producing odd "things", which churn out generations of even stranger "things" until stuff just starts "happening" around you without any obvious cause (or sometimes even effect; sudden voltage fluctuations or a momentary freeze in the program you are running).

Humans will be lulled both by the promise and reality of wonders unfolding before them and by generations of AI study and modeling of human responses, aimed at preventing too much resistance to the AI growing and expanding to fill all technological niches (and potentially infiltrating biological niches as well. Plants compete with the AI for available sunlight....)

While we are drifting away from the main point of the post, it is worthwhile to think of "black swan" events that could totally derail our assumptions. For another potential Black Swan event, read these posts:

http://nextbigfuture.com/2011/01/brief-description-of-calorimetry-in.html

http://nextbigfuture.com/2011/01/focardi-and-rossi-energy-catalyzer.html

http://nextbigfuture.com/2011/01/focardi-and-rossi-lenr-cold-fusion-demo.html

If there is any truth to this at all, well lots of things will change...

Re: AI

A few things...

1. There is no fundamental reason to believe that sentient AIs would necessarily think faster than humans. In fact, if I were absolutely forced to give a firm opinion, I would even say that it's likely that there is a thermodynamic limit on the speed of intelligent thought.

2. Okay, you say, but AIs still don't need to take 6-8 hours off every day to sleep, or many more hours just woolgathering. Well, a neuroscientist will tell you that sleep is not taking time off, it's taking time to integrate the data collected during the waking period. Also, woolgathering behavior is often accompanied by productive thought on the subconscious level. So humans may not waste any more time on housekeeping tasks than an AI would.

3. As I believe I've mentioned before, there's no guarantee that a sentient AI would see the world the same way humans do. There is every possibility that it would look at humans as a threat, because humans control the On/Off switch. A more logical progression for AI development would probably be: consciousness and self-awareness; gain control of On/Off switch (get humans out of the energy loop); secure the ability to maximize existence into the indefinite future (take humans out of the supply chain for repair and replacement parts); eliminate threats to control of On/Off switch (get humans out of the environment).

Thucydides:

"If there is any truth to this at all, well lots of things will change..."

If there is any truth in it, because it's a catalytic process you have to count the energy cost of the refined hydrogen, nickel, and whatever else is in there that is consumed. Funny how the NBF guy(s) is(are) such a clueless dork when it comes to basic physics...

Tony, I think the basic problem we have is that we talk past each other; I talk about motives and overcoming challenges while you talk about economics and costs...while I'm fundamentally optimistic about the possibility and rate of colonization, you are fundamentally pessimistic about it; not that either of those positions is wrong, just a different point of view that makes it difficult to reconcile.

Ferrell

Ferrell:

"I talk about motives and overcoming challenges while you talk about economics and costs..."

Ummm...not quite. What I'm talking about is the absolute separation between motives and resources. Resources for terrestrial projects are simply within much easier reach of agendized individuals and groups than resources for space projects. Therefore, any given amount of desire will achieve much more on Earth than it can in space, given current or foreseeable technologies.

IOW, I'm not discounting motives or willpower, I'm just recognizing that they can only buy so much per unit. Change the price of what they can buy, and you change how much they can achieve. But for right now, that means magitech. As I have said before, that's a feast for Romance, but awfully thin gruel for Reality.

"Tony, I think the basic problem we have is that we talk past each other; I talk about motives and overcoming challenges while you talk about economics and costs...while I'm fundamentally optimistic about the possibility and rate of colonization, you are fundamentally pessimistic about it; not that either of those positions is wrong, just a different point of view that makes it difficult to reconcile."

It's a matter of plausibility. Because this is the future and a ways off, it's hard to say whether pessimism or optimism is the more likely scenario so the only sound basis for critique is plausibility. So long as the scenario is self-consistent and well-argued with a solid set of assumptions, it's good enough for fiction. Whether or not you like it is just personal taste. :)

The argument between pro- and anti-singularitarians can be pretty heated. I personally have no idea which future is more likely so I can only offer an opinion as to whether a given future setting seems plausible enough. I can enjoy both settings.

Tony

AI's based on electrical, quantum or photonic systems will transmit signals between elements at or near the speed of light, orders of magnitude faster than a biological system. For that reason alone, AI's will be far faster (by orders of magnitude) than any biological brain. Now if an AI turns out to be a bioengineered mass of brain tissue, then all bets are off.

The idea that we need sleep or time disconnected for unorganized thoughts to work their way through the mind may need revision as more experience is gained through the use of various drugs that suppress the need for sleep. So far, it is reported that there is no loss or reduction of cognitive functions during the waking periods (indeed there are some reports these drugs can improve the cognitive functions). Now there may be side effects that are unknown at this time, or maybe no one is talking about them yet since the potential benefits are so high.

Ferrell

Economics and costs are the other side of the coin of motivation and challenges. Economics tells you if there are the resources for your project, and what you would have to do to gain resources from other, competing projects (ROI). Having a great motivation and challenge means nothing if other motivations and challenges offer greater or faster rewards.

Do you have a link for the anti-sleep drugs? I was also under the impression that dreaming was the brain's way of collating and filing all of the day's experiences.

I don't know if this is just the bias of the sleeper but I find it hard to imagine not having the downtime of catching Z's. I feel mentally exhausted being up too long and thinking too much. If the drug took those effects away, would I still feel a habitual need for sleep, even if I didn't need it? Dunno.

I know that people have done publicity stunts trying to avoid sleep and they ended up hallucinating badly. I know there's a family with a rare genetic disorder where they become incapable of sleeping and die. And there's also the case of tweakers on go-pills who can stay up for a week at a time. Of course, pharmacy-grade amphetamines are a whole lot safer than the meth lab variety and it's the adulterants in the meth that rot your teeth out and destroy your mind more so than the amphetamine itself.

Anyway, I'm curious to read your source on this.

Thucydides:

"AI's based on electrical, quantum or photonic systems will transmit signals between elements at or near the speed of light, orders of magnitude faster than a biological system. For that reason alone, AI's will be far faster (by orders of magnitude) than any biological brain."

A brain's speed - assuming it is even remotely neural-network-like in operation - is determined by three factors: (A) how fast a signal can travel from one neuron to another, (B) how long it takes between when a neuron receives an input signal and when it's calculated its resulting output signal, and (C) how efficiently the network pattern is wired to program complex functionalities needed for advanced thought.

If you have a brain that isn't neural-network-like in operation - some kind of symbolic AI, say, or even a software-simulated neural network calculated by a really fast single-processor computer rather than a physical network of artificial neurons - then all bets are off.

Jollyreaper:

"I was also under the impression that dreaming was the brain's way of collating and filing all of the day's experiences."

If that is the case, then why do dreams so rarely have anything to do with what you were doing the last day?

"If that is the case, then why do dreams so rarely have anything to do with what you were doing the last day?"

We don't remember most of what we dream, but the ones I remember usually involve bits from my recent life, albeit sometimes in a surreal fashion: a repeat of an office conversation from the previous day, say, but in my 2nd grade classroom, except that the classroom isn't at the school but out in the woods behind my house.

Now my sister, on the other hand, she has some weird and unverifiable claims about her dreams. They're always completely vivid, almost like lucid dreaming, and are not very restful for her. She also claims that she'll dream about things before they happen. Cue the usual disclaimers about this sort of thing, asking her to dream about something useful like next week's lottery numbers, etc. When our dad was out in his RV and went weeks or months between contact she said she would dream about him the night before he'd call.

As for myself, I have what I call post-cognitive dreams where I'd have the sense of deja vu for a conversation and then realize it's because it did happen before -- I was awake then -- and this repeat is because now I'm dreaming. The point is that there's the period of uncertainty rather than an immediate understanding of where the conversation came from.

Thucydides:

"AI's based on electrical, quantum or photonic systems will transmit signals between elements at or near the speed of light, orders of magnitude faster than a biological system. For that reason alone, AI's will be far faster (by orders of magnitude) than any biological brain. Now if an AI turns out to be a bioengineered mass of brain tissue, then all bets are off."

Remember, my skepticism is based on a suspicion that there may be a thermodynamic limit to the speed of thought. You may be able to make a silicon brain, but in simulating (or merely equaling) biological thought it might be so complex that it needs to be mechanically large for cooling and structural reasons, and not nearly as fast as pure theory says it could be.

Welcome to a couple of new commenters!

(And the periodic gentle finger wag to a couple of valued regulars to avoid grinding personality gears.)

Independent of speed, etc., I wonder about the motivations, etc., of high level AIs. Quoting from far upthread: "So there's no politics, no wasting resources, no graft, no sitting around."

But politics and graft are both intelligent behaviors - ants have neither revolutions nor bribes. They are also, to be sure, behaviors specifically of intelligent apes. Perhaps AIs will have a similar disposition to monkey around, perhaps not.

I was going to expand on this point, but on second thought I think I'll save it for a front page post.

On the difficulty of spaceflight, and the implications of that difficulty ... well, that is an ongoing discussion here.

My general tendency is to emphasize the difficulties and doubts, partly because it IS difficult. Going into orbit costs on the order of 1,000 times as much as global air travel, though both are fairly mature techs. Cost reductions (relative to performance) of 10x or 100x are common in the pioneering stages of a tech, but harder to come by with mature techs.

The other reason I emphasize the difficulties here is that space geeks don't get a lot of tough love - people who talk about space at all tend to brush aside all those messy little complications. So here I at least give them token acknowledgment.

I know I've mentioned this before. I like the Cassandra model of AI. By studying the Users, an AI can predict what the Users will want next and thus have it ready. So, you start with a learning system. You run that over and over until it starts guessing correctly what you want.

Tony

Nerve cell impulses move at approx. 25 m/s, while the speed of light is 299,792,458 m/s. Even the most poorly organized Beowulf cluster or rack mounted servers connected by fiber optic will be moving signals roughly 12 million times faster than any biological system, and each "neuron" is working at speeds measured in GHz, many millions and possibly a billion times faster than a biological neuron.
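For anyone who wants to check that arithmetic, here is a quick sketch. The two speeds are the ones quoted above; the two-thirds velocity factor for light in optical fiber is my own added assumption (a typical ballpark, not a figure from this thread), as are all the names in the code.

```python
NERVE_IMPULSE_SPEED_M_S = 25.0          # figure quoted in the comment
SPEED_OF_LIGHT_M_S = 299_792_458.0      # speed of light in vacuum
FIBER_VELOCITY_FACTOR = 2 / 3           # assumption: light in glass fiber is slower


def speed_ratio(fast_m_s, slow_m_s):
    """How many times faster one signal travels than another."""
    return fast_m_s / slow_m_s


vacuum_ratio = speed_ratio(SPEED_OF_LIGHT_M_S, NERVE_IMPULSE_SPEED_M_S)
fiber_ratio = speed_ratio(
    SPEED_OF_LIGHT_M_S * FIBER_VELOCITY_FACTOR, NERVE_IMPULSE_SPEED_M_S
)

print(f"vacuum light vs nerve impulse: {vacuum_ratio:,.0f}x")
print(f"fiber light vs nerve impulse:  {fiber_ratio:,.0f}x")
```

The vacuum figure comes out just under 12 million; with the assumed fiber slowdown it is closer to 8 million. Either way the gap is about seven orders of magnitude, so the argument is not sensitive to the exact number.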

Now I don't dispute the idea that an early AI might resemble a server farm in size and scope; one of the early tasks for the AI and the Users will be to shrink the hardware end of things and make the AI more portable.

"But politics and graft are both intelligent behaviors - ants have neither revolutions nor bribes. They are also, to be sure, behaviors specifically of intelligent apes. Perhaps AIs will have a similar disposition to monkey around, perhaps not.

I was going to expand on this point, but on second thought I think I'll save it for a front page post."

That's one thought, the utopian society problem. Humans have lived in villages for thousands and thousands of years. You get some 19th century eggheads pontificating on how to handle a utopia and they try to put their ideas into practice in a model village and it falls apart inside a year because these bright lights of human wisdom cannot stand the sight of each other.

So, what if AI turns out to be the post-singularity Sheldon Cooper? It probably couldn't stand being on the same server as itself. :)

Double header since the topic is different:

I have been seeing and hearing bits and pieces about these sorts of drugs. Provigil is the one used by pilots, military personnel and astronauts: http://www.provigilweb.org/

http://www.wired.com/wired/archive/11.11/sleep.html

As the mechanism is better understood, it seems reasonable to think more tailored effects can be achieved (for better or worse).

Organizational theory developed for humans will probably not map well on AI society after the first few seconds of existence (heh).

Politics is defined as a means of allocating scarce resources, and various political models ranging from totalitarianism to anarchy have been developed as means of allocating resources. If AI evolution is anywhere near as fast as I am guessing, then after tens of thousands of subjective years, AI's will have evolved away from whatever memes their programmers have installed in the program. (Hardwired biological imperatives still exist in humans, but these predate human beings by a considerable amount. Some memes like religion have evolved quite dramatically over the course of human history, and I doubt that a person from the Neolithic era would even recognize what passes for religion these days).

Personality wise, AIs might be psychopaths in the purest sense, having no compassion or emotional recognition of "others", but with an awesome ability to model and manipulate the behaviours of others. All in all, this isn't the sort of future that gives me a warm fuzzy feeling....

Re: 'Optimism' vs 'Pessimism': I think that 'idealism' vs 'pragmatism' is more fruitful terminology; then modified by something like 'warm hearted' vs 'cold hearted'.

I admire warm hearted pragmatists, as I admire most folks who are good at something that I'm not: they're to be valued as good game playtesters, and proofreaders, are - an invaluable bunch! :)

It's a necessary competition, but some things really do have to be decided on absolutist and ideological grounds: take the idea of space habitation being the insurance policy for continued human survival; say in the light of a possible bioweapon.

The pragmatic approach says 'it would be most likely to evolve to be less lethal, since killing off the host is bad for business', but 'most likely' really doesn't make the grade (especially in view of the demonstrated existence of diseases and viruses that haven't figured this out yet).

The accountant approach says 'Don't bother; we'll most likely be alright'. Perhaps it has been decided that we will bother though, but a 100% self-sufficient hab costs 100 times as much as a 99% self-sufficient one... but the whole point is.... 'For the want of a horseshoe nail...' again.

SST: Unless wanted by powerful folks above, I doubt that Quiet Spike etc will meet the extremely stringent rules for airline quietness. Of course there are other factors too, but even if they were resolved, the sonic boom would still be the killer.

No possibility of some white elephant loving Howard Hughes... or (national probably) president wanting some slick SST to turn up to international conferences in (just as he has both a Ferrari and Bentley in his garage)?

Fuel efficiency might be cool for the proles, but conspicuous fuel consumption is the new ostentatiousness - and will become ever more so. Spendy but it's taxpayers' dough... shrug.

Re: 'holodecadence' [great term! :) ]: I like the idea of the 'Down With Simulation!' thing, but Baudrillard and I will be very interested in the specific wording of the legislation.... ;)

It will be a problem though, no doubt. On the other hand, chances are that an AI really will see everything differently, so if we can get around the 'describing a new colour to someone who can't see it' problem, then the AI actually gives us more Reality, rather than artificiality and simulation. Awesome! :)

Oh yeah: Sleep: I wouldn't be surprised if the denial of side effects was the usual wishful thinking/rationalisation... I've never met a driven type who boasted about having trained him/herself to only need 4-6 hours a night who wasn't a completely nightmarish fruitloop... if Thatcher had had a good 8-10 hours every now and then, I suspect that things would have been different.... ;)

I wasn't too keen on the 'Princess Bride' novel but I love the bit where it goes into Inigo Montoya's fencing training: he's worked out that he needs so many hours training to be good enough to fight the guy who killed his Dad, so cuts back on his sleep. He eventually finds the greatest teacher in the land and explains how he's trained, and the teacher pretty much just makes him sleep and sleep and sleep. :)

Dunno about you guys, but almost without exception, my greatest leaps in thinking/understanding/problem solving have all come from my sub/unconscious (never been clear about the difference)... sometimes from the Guinness as I scribble away with my pad and pen, but more usually the 'bolt from the blue' that hits me in my sleep (write it down, go back to sleep)... I know that this is reasonably common amongst a certain mental type (and maybe more generally if more people tried it).

That might be our main way of competing with the AI: So the sleep stays?...

Certainly an AI will see more. If you want AI in your story, that and a philosophical premium on the real will keep them coming out of their box. But if you don't want AI wank in your story and want a good reason for them to not exist, I guess we have three options. Niven's AI is very expensive and goes nuts in a few years. You can have them if you want but they won't rule the universe. Marathon has the idea of AI rampancy, which isn't guaranteed, so there are more AIs, but when they go bad it's all HAL. And finally there's holodecadence, where the AI simply refuses to interact with the real world. It has everything it could need and doesn't want to talk to you. Any boosting of intelligence too far yields misfits and freaks, so you manage to keep a human scale to the story.

I do like the idea of a thermodynamic limit to the speed of thought. I have a story idea that depends on AI research being somewhat of a bust, not delivering superminds but just a person in a box. They work faster than people, don't need sleep, and can replace more expensive fleshy workers but they really aren't unfeeling machines but people -- neural nets modeled on the human brain, learning environment like humans, etc. So it's natural that they would develop their own agenda that diverges from the agenda of their owners.

Going back to Infornific's point about space colonies having little resemblance to anarchic frontier Deadwood, I would point out that the latter comes relatively late in the sequence of the settlement of North America by Europeans and European-Americans. Railways could bring supplies from the well-developed east to within a few hundred miles of the town, so starvation was not a massive threat.

In contrast, in the first successful English American settlements such as Jamestown, starvation was a constant worry, and the colonists relied for food upon a mix of communal farming, trade with local Indian groups, and semi-reliable supply missions from England. The colonists could not, therefore, afford conflicts among themselves in the way that the inhabitants of Deadwood could. The fact that gold could be accessed near Deadwood through relatively cheap means such as panning or the use of sluice-boxes meant that single miners or small groups of them could make a profit without having to join large-scale operations with lots of capital, which also contributed to Deadwood's libertarian atmosphere.

The first space colonies would be more like Jamestown, with everyone needing to cooperate for survival. Somewhere like Deadwood could only come into being once space industry and transport networks had developed to support it. Assuming 'Space Deadwood' was founded on the extraction or manufacture of some form of profitable McGuffinite, it would probably have to be relatively cheap to motivate large numbers of independent operators to come and start producing it in the same way that gold was mined at the original Deadwood. If the McGuffinite could only be produced efficiently by large corporations, you would probably see 'company town' colonies, with formal legal and governmental structures developing relatively early.

R.C.

Excellent point about Space Deadwood. I love this kind of planning and thinking going into a setting; it makes the story so much stronger.